Learning Representations for Automatic Colorization. Gustav Larsson, Michael Maire, and Gregory Shakhnarovich.

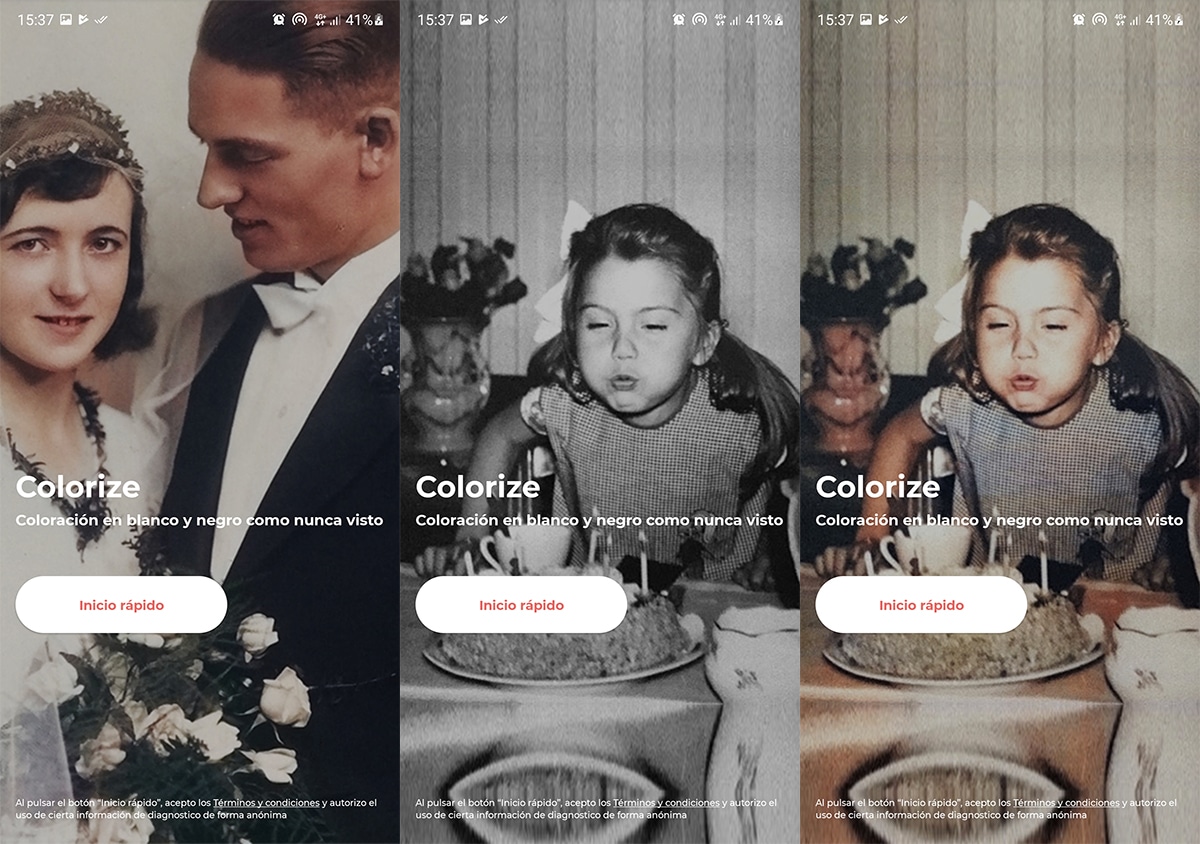


Here, we show the ImageNet categories for which our colorization helps and hurts the most on object classification. Categories are ranked according to the difference in performance of VGG classification on the colorized result compared to on the grayscale version. This is an extension of Figure 6 in the paper. Click a category below to see our results on all test images in that category.

Alexander Mordvintsev visualized the contents of our network by applying the Deep Dream algorithm to each filter in each layer of our network. The deep-dream images are grayscale and are then colorized with our network. He has kindly shared his results with us! We found that the conv4_3 layer had the most interesting structures. Click on each layer below to see the results, and let us know what you see!

We have received many interesting examples and applications, developed by users! Note that the video examples are run on a per-frame basis, with no temporal consistency enforced. If you have any examples you'd like to share, please email Richard Zhang at rich.zhang at.

Videos: Lukas Graham - 7 years; Impact (1949); Dorothy Dandridge - Zoot Suit (1942); Seven Samurai (1954)

Applications: Automatic colorizer Bot; Reddit thread from /r/InternetIsBeautiful; Colorizing an 1880 Glass Plate; Colourful Past: Find and colorize historical photos

There have been a number of works in the field of automatic image colorization in the last few months! We would like to direct you to these recent related works for comparison. For a more thorough discussion of related work, please see our full paper.
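The category ranking described above (per-category difference between classification performance on the colorized result and on the grayscale version) can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `rank_categories`, the record layout, and the example category names are hypothetical, and the per-image correctness flags are assumed to come from some classifier run elsewhere.

```python
from collections import defaultdict


def rank_categories(records):
    """Rank categories by (accuracy on colorized) - (accuracy on grayscale).

    `records` is an iterable of (category, correct_on_gray, correct_on_colorized)
    tuples, one per test image. This layout is an assumption for illustration.
    Returns a list of (category, difference) pairs, most helped first.
    """
    # Per category: [image count, grayscale hits, colorized hits]
    totals = defaultdict(lambda: [0, 0, 0])
    for category, gray_ok, color_ok in records:
        entry = totals[category]
        entry[0] += 1
        entry[1] += int(gray_ok)
        entry[2] += int(color_ok)

    # Difference in accuracy; positive means colorization helped.
    diffs = {
        category: (color_hits - gray_hits) / count
        for category, (count, gray_hits, color_hits) in totals.items()
    }
    return sorted(diffs.items(), key=lambda item: item[1], reverse=True)


# Hypothetical records, not results from the paper.
example = [
    ("lorikeet", False, True),
    ("lorikeet", False, True),
    ("modem", True, False),
    ("modem", True, True),
    ("zebra", True, True),
]
ranking = rank_categories(example)
```

The head of `ranking` holds the categories that colorization helps the most, and the tail holds the ones it hurts the most.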


See Section 3 in the supplementary pdf for further discussion of the differences between our algorithm and that of Cheng et al.


We show results on legacy black and white photographs from renowned photographers Ansel Adams and Henri Cartier-Bresson, along with a set of miscellaneous photos. Click the montage to the left to see our results on ImageNet validation photos (this is an extension of Figure 6 from our paper). Click the montage to the right to see results on a test set sampled from SUN (extension of Figure 12 in our paper). These images are random samples from the test set and are not hand-selected. (Hover for our results; click for full images.)

We also provide an initial comparison against Cheng et al. We were unable to acquire code or results from the authors, so we simply ran our method on screenshots from the figures in the paper of Cheng et al. (Hovering shows our results; click for additional examples.)

Welcome! Computer vision algorithms often work well on some images, but fail on others. We believe our work is a significant step forward in solving the colorization problem. However, there are still many hard cases, and this is by no means a solved problem. Some failure cases can be seen below and in the figure here. This is partly because our algorithm is trained on one million images from the ImageNet dataset, and will thus work well for these types of images, but not necessarily for others. We include colorizations of black and white photos of renowned photographers as an interesting "out-of-dataset" experiment and make no claims as to artistic improvements, although we do enjoy many of the results! There has been some concurrent work on this subject as well. Please enjoy our results, and if you're so inclined, try the model yourself!

Given a grayscale photograph as input, this paper attacks the problem of hallucinating a plausible color version of the photograph. This problem is clearly underconstrained, so previous approaches have either relied on significant user interaction or resulted in desaturated colorizations. We propose a fully automatic approach that produces vibrant and realistic colorizations. We embrace the underlying uncertainty of the problem by posing it as a classification task and use class-rebalancing at training time to increase the diversity of colors in the result. The system is implemented as a feed-forward pass in a CNN at test time and is trained on over a million color images. We evaluate our algorithm using a "colorization Turing test," asking human participants to choose between a generated and ground truth color image. Our method successfully fools humans on 32% of the trials, significantly higher than previous methods. Moreover, we show that colorization can be a powerful pretext task for self-supervised feature learning, acting as a cross-channel encoder. This approach results in state-of-the-art performance on several feature learning benchmarks.

ECCV Talk 10/2016, also hosted on
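To make the "classification task with class-rebalancing" idea from the abstract more concrete, here is a minimal sketch of one way such a rebalancing could look. It assumes that each pixel's color is quantized into one of a fixed number of bins and that rare bins are up-weighted in the loss. The mixing parameter `lam`, the function names, and the toy bin frequencies are assumptions for illustration and are not taken from this page.

```python
import math


def rebalancing_weights(bin_probs, lam=0.5):
    """Per-bin loss weights that up-weight rare color bins.

    `bin_probs` holds the empirical frequency of each quantized color bin.
    The frequencies are mixed with a uniform distribution (controlled by the
    assumed parameter `lam`), inverted, and rescaled so that the expected
    weight under the empirical distribution equals 1.
    """
    num_bins = len(bin_probs)
    total = sum(bin_probs)
    probs = [p / total for p in bin_probs]

    # Mix with uniform so that very rare bins do not get unbounded weights.
    mixed = [(1.0 - lam) * p + lam / num_bins for p in probs]
    raw = [1.0 / m for m in mixed]

    # Normalize so that sum_q probs[q] * weight[q] == 1.
    expected = sum(p * w for p, w in zip(probs, raw))
    return [w / expected for w in raw]


def weighted_cross_entropy(pred_probs, target_bin, weights, eps=1e-12):
    """Cross-entropy for one pixel, scaled by the weight of its true bin."""
    return -weights[target_bin] * math.log(pred_probs[target_bin] + eps)


# Toy frequencies: bin 0 stands in for common desaturated colors,
# bin 2 for rare saturated ones.
toy_probs = [0.7, 0.2, 0.1]
toy_weights = rebalancing_weights(toy_probs)
```

With weights of this kind, a mistake on a rare, saturated color costs more than a mistake on a common, desaturated one, which is the intuition behind increasing the diversity of colors in the result.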
